|

Hao Zhou 周浩

I am currently a third-year Ph.D. student at Penn State, advised by Prof. Mahanth Gowda. I was also fortunate to be mentored by Prof. Jie Xiong during my internships at Microsoft Research Asia. My research interests span wireless sensing, wearable computing, and multimodal learning.

"Brevity is the soul of wit" -- William Shakespeare

I am looking for research internships for summer 2024 (and fall 2024).

CV / Google Scholar / Github / X / LinkedIn |

|

| News · Publications · Services · Awards & Honors |

[2023-11] Received Penn State International Travel Grant.

[2023-09] Received Student Travel Grant from MobiCom'23.

[2023-08] SignQuery is accepted to MobiCom'23.

[2023-05] OmniRing won Best Paper Award for Edge IoT AI.

[2023-04] I will be an intern at Microsoft Research Asia!

[2023-01] OmniRing is accepted to IoTDI 2023.

[2022-09] Received an outstanding TA award! Thanks everyone!

[2022-04] ssLOTR is accepted to IMWUT 2022.

[2021-10] DACHash won the Best Paper Award.

[2021-08] DACHash is accepted to SBAC-PAD 2021.

[2021-07] I started my Ph.D. at Penn State.

[2020-08] My first paper is accepted to AI4I 2020. |

|


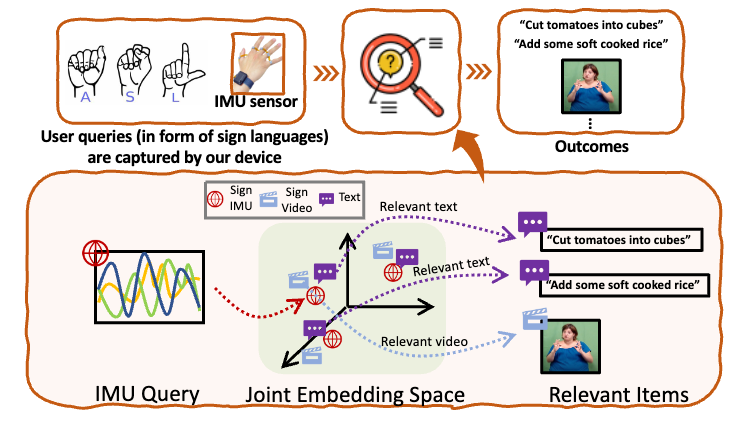

Search engines such as Google, Baidu, and Bing have revolutionized the way we interact with the cyber world with a number of applications in recommendations, learning, advertisements, healthcare, entertainment, etc. In this paper, we design search engines for sign languages such as American Sign Language (ASL). Sign languages use hand and body motion for communication with rich grammar, complexity, and vocabulary that is comparable to spoken languages. This is the primary language for the Deaf community with a global population of ≈ 500 million. However, search engines that support sign language queries in native form do not currently exist. While translating a sign language to a spoken language and using existing search engines might be one possibility, this can miss critical information because existing translation systems are either limited in vocabulary or constrained to a specific domain. In contrast, this paper presents a holistic approach where ASL queries in native form as well as ASL videos and textual information available online are converted into a common representation space. Such a joint representation space provides a common framework for precisely representing different sources of information and accurately matching a query with relevant information that is available online. Our system uses low-intrusive wearable sensors for capturing the sign query. To minimize the training overhead, we obtain synthetic training data from a large corpus of online ASL videos across diverse topics. Evaluated over a set of Deaf users with native ASL fluency, the accuracy is comparable with state-of-the-art recommendation systems for Amazon, Netflix, Yelp, etc., suggesting the usability of the system in the real world. For example, the recall@10 of our system is 64.3%, i.e., among the top ten search results, about six of them are relevant to the search query. Moreover, the system is robust to variations in signing patterns, dialects, sensor positions, etc. |
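As a rough illustration of how top-k retrieval metrics like the one quoted above are commonly computed, here is a minimal sketch. The function names, the toy result IDs, and the exact metric definitions are assumptions for illustration; the paper's own evaluation protocol is not described on this page and may differ.

```python
def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k returned results that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for item in ranked_ids[:k] if item in relevant_ids)
    return hits / k


def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of all relevant items that appear in the top-k results."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not relevant_ids:
        return 0.0
    hits = sum(1 for item in ranked_ids[:k] if item in relevant_ids)
    return hits / len(relevant_ids)


# Hypothetical toy data: a ranked result list and the set of relevant items.
ranked = ["v3", "v7", "v1", "v9", "v4"]
relevant = {"v3", "v1", "v8"}

print(precision_at_k(ranked, relevant, 5))
print(recall_at_k(ranked, relevant, 5))
```

The two metrics share the same numerator (relevant items found in the top k) and differ only in the denominator, so it helps to check which one a reported number refers to.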

|


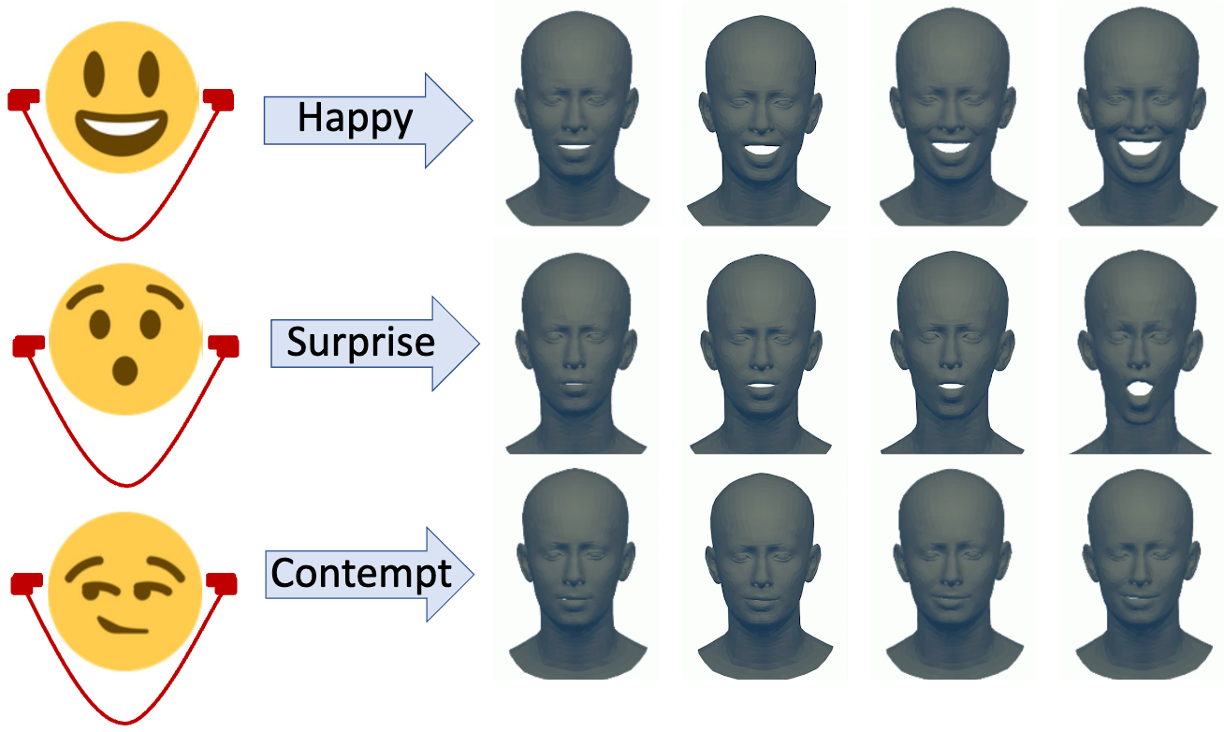

This paper presents EARFace, a system that shows the feasibility of tracking facial landmarks for 3D facial reconstruction using in-ear acoustic sensors embedded within smart earphones. This enables a number of applications in the areas of facial expression tracking, user interfaces, AR/VR applications, affective computing, accessibility, etc. While conventional vision-based solutions break down under poor lighting and occlusions and also suffer from privacy concerns, earphone platforms are robust to ambient conditions while being privacy-preserving. In contrast to prior work on earable platforms that perform outer-ear sensing for facial motion tracking, EARFace shows the feasibility of completely in-ear sensing with a natural earphone form factor, thus enhancing wearing comfort. The core intuition exploited by EARFace is that the shape of the ear canal changes due to the movement of facial muscles during facial motion. EARFace tracks the changes in the shape of the ear canal by measuring the ultrasonic channel frequency response (CFR) of the inner ear, which ultimately allows it to track facial motion. A transformer-based machine learning (ML) model is designed to exploit spectral and temporal relationships in the ultrasonic CFR data to predict the facial landmarks of the user with an accuracy of 1.83 mm. Using these predicted landmarks, a 3D graphical model of the face that replicates the precise facial motion of the user is then reconstructed. Domain adaptation is further performed by adapting the weights of layers using a group-wise and differential learning rate. This decreases the training overhead in EARFace. The transformer-based ML model runs on smartphone devices with a processing latency of 13 ms and an overall low power consumption profile. Finally, usability studies indicate higher comfort levels when wearing EARFace’s earphone platform in comparison with alternative form factors. |

|


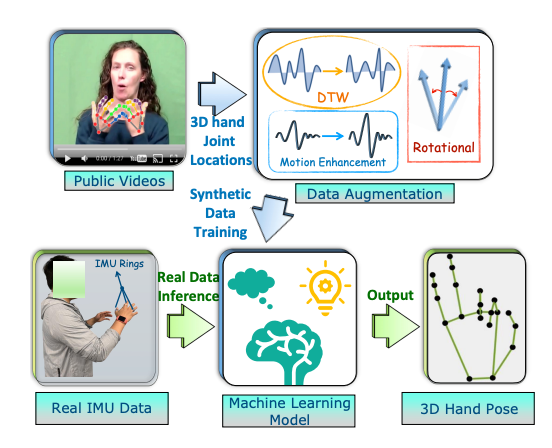

This paper presents OmniRing, an open-source smartring platform with IMU and PPG sensors for activity tracking and health analytics applications. Smartring platforms are on the rise because they are comfortable to wear, with the market size expected to reach $92 million soon. Nevertheless, most existing platforms are either commercial and proprietary, without details of software/hardware, or use suboptimal PCB designs resulting in bulky form factors that are inconvenient to wear in daily life. Towards bridging the gap, OmniRing presents an extensible design of a smartring with a miniature form factor, longer battery life, wireless communication, and water resistance so that users can wear it all the time. Towards this end, OmniRing exploits opportunities in SoC, and carefully integrates the sensing units with a microcontroller and BLE modules. The electronic components are integrated on both sides of a flexible PCB that is bent in the shape of a ring and enclosed in a flexible and waterproof case for smooth skin contact. The overall cost is under $25, with a weight of 2.5 g and up to a week of battery life. Extensive usability surveys validate the comfort levels. To validate the sensing capabilities, we enable an application in 3D finger motion tracking. By extracting synthetic training data from public videos coupled with data augmentation to minimize the overhead of training data generation for a new platform, OmniRing designs a transformer-based model that exploits correlations across fingers and time to track 3D finger motion with an accuracy of 6.57 mm. We also validate the use of PPG data from OmniRing for heart rate monitoring. We believe the platform can enable exciting applications in fitness tracking, metaverse, sports, and healthcare. |

|


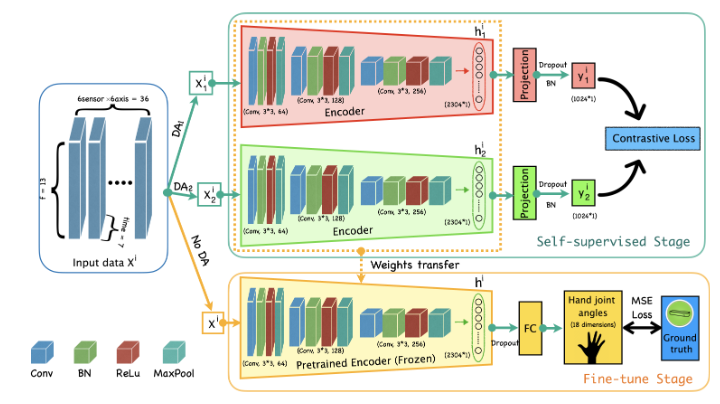

This paper presents ssLOTR (self-supervised learning on the rings), a system that shows the feasibility of designing self-supervised learning based techniques for 3D finger motion tracking using a custom-designed wearable inertial measurement unit (IMU) sensor with a minimal overhead of labeled training data. Ubiquitous finger motion tracking enables a number of applications in augmented and virtual reality, sign language recognition, rehabilitation healthcare, sports analytics, etc. However, unlike vision, there are no large-scale training datasets for developing robust machine learning (ML) models on wearable devices. ssLOTR designs ML models based on data augmentation and self-supervised learning to first extract efficient representations from raw IMU data without the need for any training labels. The extracted representations are further trained with small-scale labeled training data. In comparison to fully supervised learning, we show that only 15% of labeled training data is sufficient with self-supervised learning to achieve similar accuracy. Our sensor device is designed using a two-layer printed circuit board (PCB) to minimize the footprint and uses a combination of polylactic acid (PLA) and thermoplastic polyurethane (TPU) as housing materials for sturdiness and flexibility. It incorporates a system-on-chip (SoC) microcontroller with integrated WiFi/Bluetooth Low Energy (BLE) modules for real-time wireless communication, portability, and ubiquity. In contrast to gloves, our device is worn like rings on fingers and therefore does not impede dexterous finger motion. Extensive evaluation with 12 users shows a 3D joint angle tracking accuracy of 9.07° (joint position accuracy of 6.55 mm) with robustness to natural variation in sensor positions, wrist motion, etc., and with low overhead in latency and power consumption on embedded platforms. |

|


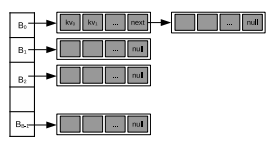

GPU acceleration of hash tables in high-volume transaction applications such as computational geometry and bioinformatics is emerging. Recently, several hash table designs have been proposed on GPUs, but our analysis shows that they still do not adequately factor in several important aspects of a GPU’s execution environment, leaving large room for further optimization. To that end, we present a dynamic, cache-aware, concurrent hash table named DACHash. It is specifically designed to improve memory efficiency and reduce thread divergence on GPUs. We propose several novel techniques, including a GPU-friendly data structure and sizing, a reorder algorithm, and dynamic thread-data mapping schemes, that make hash table operations more amenable to the GPU architecture. Tested on an NVIDIA RTX 3090, DACHash achieves a peak performance of 8.65 billion queries/second in static searching and 5.54 billion operations/second in concurrent operation execution. It outperforms the state-of-the-art SlabHash by 41.53% and 19.92%, respectively. We also verify that our proposed technique improves L2 cache bandwidth and L2 cache hit rate by 9.18× and 2.68×, respectively. |

|


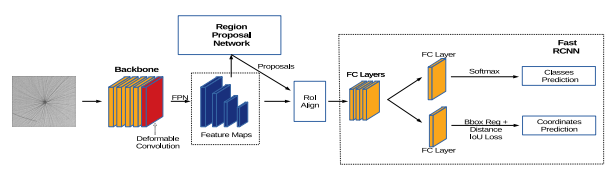

This paper presents a fabric defect detection network (FabricNet) for automatic fabric defect detection. Our proposed FabricNet incorporates several effective techniques, such as a Feature Pyramid Network (FPN), a Deformable Convolution (DC) network, and the Distance IoU loss function, into vanilla Faster R-CNN to improve the accuracy and speed of fabric defect detection. Our experiments show that, when the optimizations are combined, FabricNet achieves 62.07% mAP and 97.37% AP50 on the DAGM 2007 dataset, with an average prediction speed of 17 frames per second. |
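For readers unfamiliar with the Distance IoU loss mentioned above, the following is a minimal sketch of its standard formulation for a single pair of axis-aligned boxes: one minus IoU, plus the squared distance between box centers divided by the squared diagonal of the smallest enclosing box. The function name and the `(x1, y1, x2, y2)` box convention are assumptions for illustration; this is not FabricNet's actual training code.

```python
def diou_loss(box_a, box_b):
    """Distance IoU loss for two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    if area_a <= 0 or area_b <= 0:
        raise ValueError("boxes must have positive area")

    # Intersection over union.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    iou = inter / (area_a + area_b - inter)

    # Squared distance between the two box centers.
    center_dist_sq = (((ax1 + ax2) - (bx1 + bx2)) / 2.0) ** 2 \
        + (((ay1 + ay2) - (by1 + by2)) / 2.0) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    enclose_w = max(ax2, bx2) - min(ax1, bx1)
    enclose_h = max(ay2, by2) - min(ay1, by1)
    diag_sq = enclose_w ** 2 + enclose_h ** 2

    return 1.0 - iou + center_dist_sq / diag_sq


print(diou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
print(diou_loss((0, 0, 2, 2), (1, 1, 3, 3)))  # partially overlapping boxes
```

Unlike a plain IoU loss, the center-distance term still provides a useful signal when the predicted and ground-truth boxes do not overlap at all.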

|

|

|

Last updated in Nov 2023. Big shout-out to Jon. |