|

Hao Zhou

I am currently in my final year of Ph.D. studies at the Pennsylvania State University, advised by Prof. Mahanth Gowda. I was also fortunate to receive mentorship from Prof. Jie Xiong during my internship at Microsoft Research Asia. I have broad interests in AI-powered Mobile and Wearable Systems, Internet-of-Things and Cyber-Physical Systems, Human-Centric and Biomedical Sensing, and Representation Learning for Time-Series.

I am on the job market this year, and welcome any feedback! 😊 Bio / CV / Google Scholar / Github / LinkedIn |

|

| News · Publications · Services · Awards & Honors |

[2026-01] HiMAE (co-authored) is accepted to ICLR'26!

[2026-01] MemoryAids is accepted to ICASSP'26.

[2025-11] Invited to give a guest lecture for PSU EE 497. I will talk about my research on wearable technology.

[2025-10] Our wearable foundation model work (w/ Samsung) is accepted to NeurIPS TS4H, and is submitted to ICLR 2026.

[2025-05] My thesis has been accepted to MobiSys'25 Rising Star.

[2025-05] I will return to Samsung Research America for a summer internship.

[2025-03] Recipient of Penn State's Alumni Association Dissertation Award (only recipient from the School of EECS). News.

[2025-01] One co-authored paper from the internship at Samsung Research America got accepted to CHI'25.

[2024-12] Two papers from the internship at Samsung Research America got accepted to ICASSP'25.

[2024-10] Received a Student Travel Grant from MobiCom'24.

[2024-10] I will serve as a student volunteer at MobiCom'24.

[2024-08] UWBOrient is accepted to MobiCom'24. See you in D.C.!

[2024-05] I will be interning on the Digital Health team at Samsung Research America.

[2024-03] ASLRing is accepted to IoTDI 2024.

[2023-11] I passed the comprehensive exam!

[2023-11] Received a Penn State International Travel Grant.

[2023-08] SignQuery is accepted to MobiCom'23.

[2023-05] OmniRing won the Best Paper Award for Edge IoT.

[2023-04] I will be an intern at Microsoft Research Asia!

[2023-01] OmniRing is accepted to IoTDI 2023.

[2022-09] Received an outstanding TA award! Thanks everyone!

[2022-04] ssLOTR is accepted to IMWUT 2022.

[2021-07] I started my Ph.D. at Penn State. |

|

abstract /

bibtex

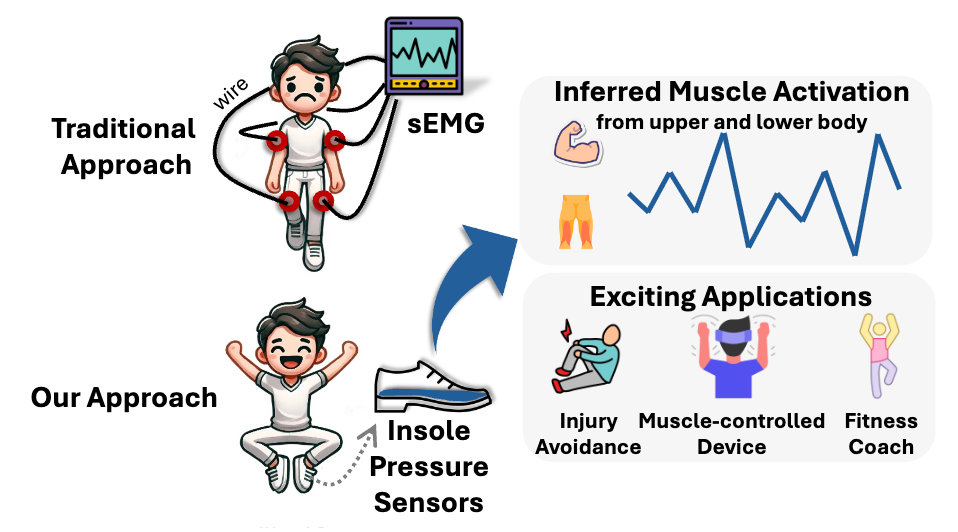

Muscle activation initiates contractions that drive human movement, and understanding it provides valuable insights for injury prevention and rehabilitation. Yet, sensing muscle activation is barely explored in the rapidly growing mobile health market. Traditional methods for muscle activation sensing rely on specialized electrodes, such as surface electromyography, making them impractical, especially for long-term use during free motion. In this paper, we introduce Press2Muscle, the first system to unobtrusively infer muscle activation using insole pressure sensors. The key idea is to analyze foot pressure changes resulting from full-body muscle activation that drives movements. To handle variations in pressure signals due to differences in users’ gait, weight, and movement styles, we propose a data-driven approach to dynamically adjust reliance on different foot regions and incorporate easily accessible biographical data to enhance Press2Muscle’s generalization to unseen users. We conducted an extensive study with 30 users. Under a leave-one-user-out setting, Press2Muscle achieves a root mean square error of 0.025, marking a 19% improvement over a video-based counterpart. A robustness study validates Press2Muscle’s ability to generalize across user demographics, footwear types, and walking surfaces. Additionally, we showcase muscle imbalance detection and muscle activation estimation under free-living settings with Press2Muscle, confirming the feasibility of muscle activation sensing using insole pressure sensors in real-world settings. |

|

abstract /

bibtex

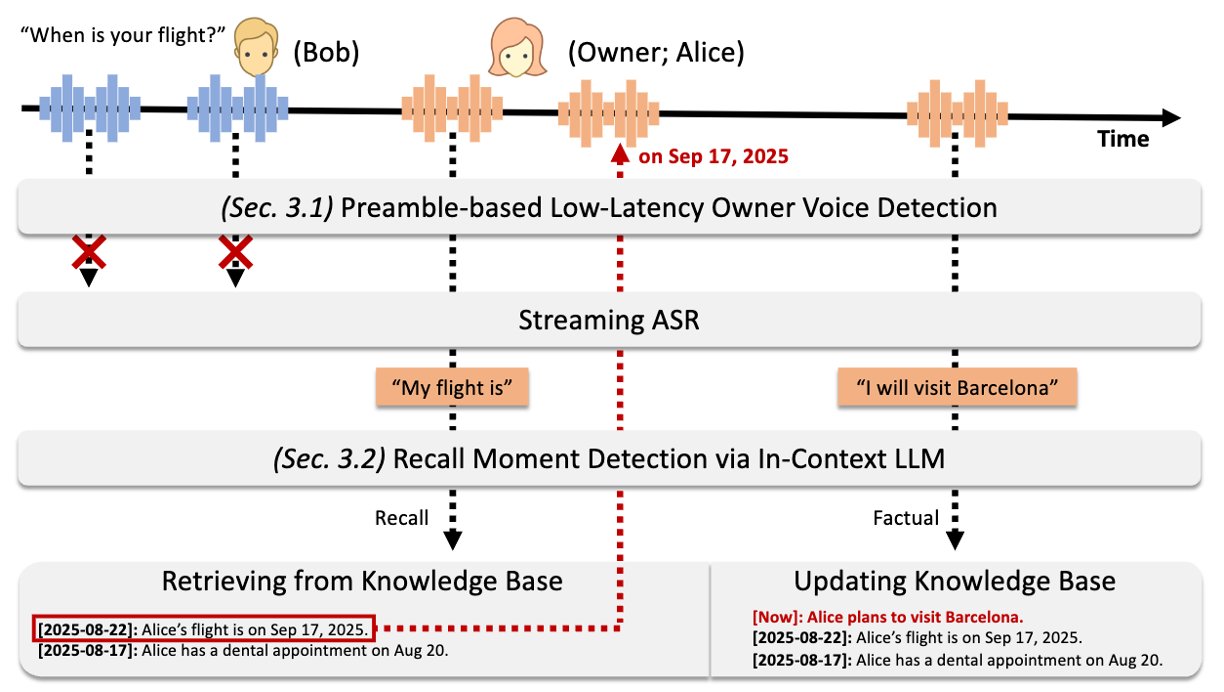

Timely recall of details is crucial for collaboration, decision-making, and planning, especially for people with dementia (57 million globally) or memory impairments (in the U.S., 10% of adults over 65 have dementia and 22% have mild impairment). Existing assistive technologies often rely on manual queries or lack awareness of conversational ownership, leading to irrelevant recall and privacy concerns, and limiting their effectiveness in dynamic, multi-party settings. In this paper, we introduce MemoryAids, a proactive voice memory assistant that seamlessly operates during live conversations. MemoryAids focuses only on owner speech, detects missing details in real time, and provides concise summaries by proposing a low-latency owner detection module and leveraging in-context learning. Our evaluations show accurate owner detection (a recall of 90.7%), recall moment detection (92.7% accuracy with 5.8% word error rate), and sub-second latency, highlighting its potential to benefit people with memory impairments. |

|

abstract /

bibtex

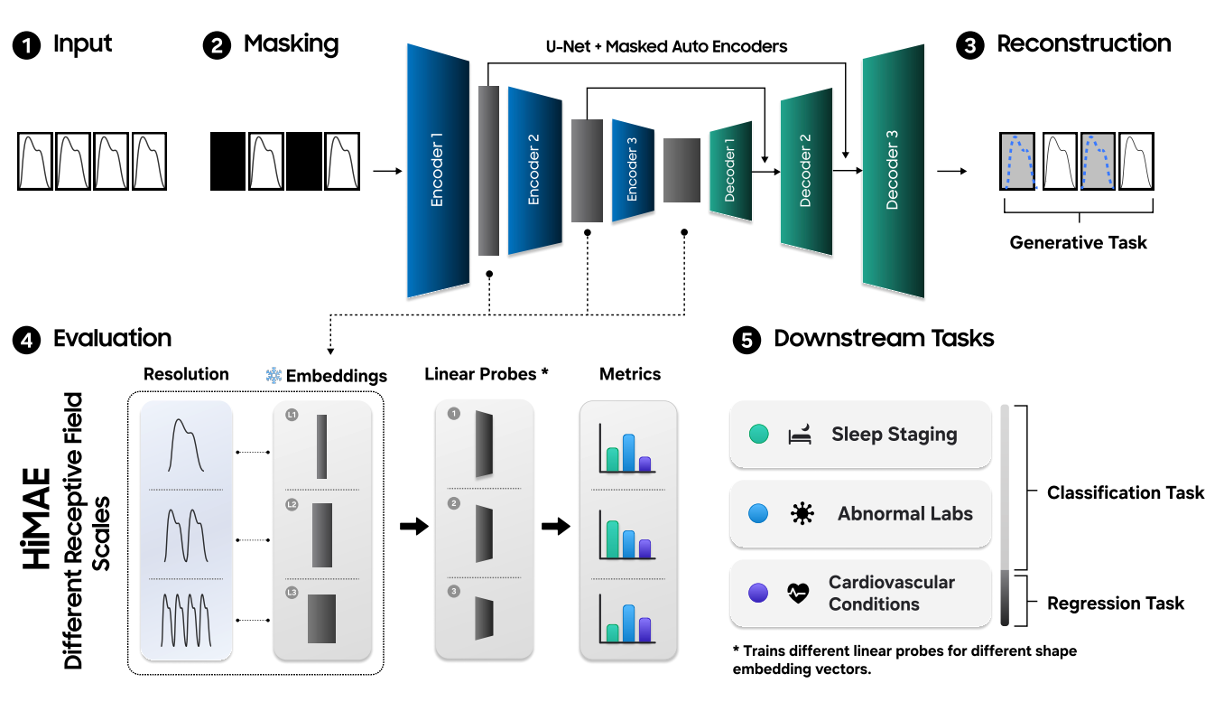

Wearable sensors provide abundant physiological time series, yet the principles governing their predictive utility remain unclear. We hypothesize that temporal resolution is a fundamental axis of representation learning, with different clinical and behavioral outcomes relying on structure at distinct scales. To test this resolution hypothesis, we introduce HiMAE (Hierarchical Masked Autoencoder), a self-supervised framework that combines masked autoencoding with a hierarchical convolutional encoder–decoder. HiMAE produces multi-resolution embeddings that enable systematic evaluation of which temporal scales carry predictive signal, transforming resolution from a hyperparameter into a probe for interpretability. Across classification, regression, and generative benchmarks, HiMAE consistently outperforms state-of-the-art foundation models that collapse scale, while being orders of magnitude smaller. HiMAE is an efficient representation learner compact enough to run entirely on-watch, achieving sub-millisecond inference on smartwatch-class CPUs for true edge inference. Together, these contributions position HiMAE as both an efficient self-supervised learning method and a discovery tool for scale-sensitive structure in wearable health. |

|

abstract /

bibtex

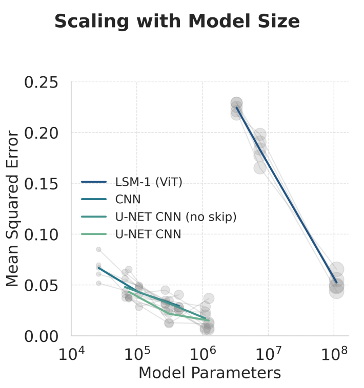

We propose a lightweight foundation model for wearable signals that leverages convolutional inductive biases within a masked autoencoder and U-Net CNN backbone. By explicitly encoding temporal locality and multi-scale structure, our approach aligns more naturally with the nonstationary dynamics of physiological waveforms than attention-based transformers. Pretrained on 80k hours of photoplethysmogram (PPG), the model matches or surpasses larger state-of-the-art baselines across ten clinical classification tasks. At the same time, it achieves two to three orders of magnitude reductions in parameters (0.31M vs. 110M), memory footprint (3.6MB vs. 441.3MB), and compute, while delivering substantial speedups (∼4× CPU, ∼20× GPU) with resolution flexibility. Together, these results establish compact convolutional self-supervised models as both scientifically aligned and practically scalable for potential real-time on-device healthcare monitoring. |

|

|

|

abstract /

bibtex

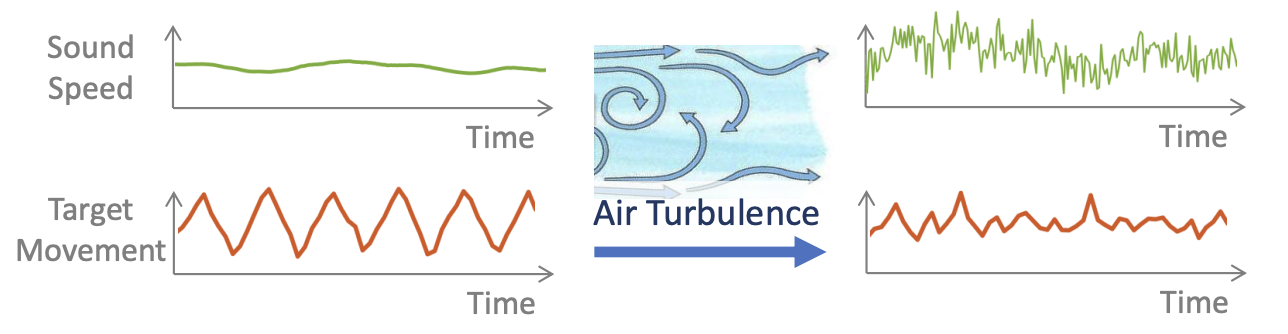

Acoustic sensing has recently garnered significant interest for a wide range of applications, from motion tracking to health monitoring. However, prior works overlooked an important real-world factor affecting acoustic sensing systems: the instability of the propagation medium due to ambient airflow. Airflow introduces rapid and random fluctuations in the speed of sound, leading to performance degradation in acoustic sensing tasks. This paper presents WindDancer, the first comprehensive framework for understanding how ambient airflow influences existing acoustic sensing systems and for providing solutions that enhance system performance in the presence of airflow. Specifically, our work includes a mechanistic understanding of airflow interference, modeling of sound speed variations, and analysis of how several key real-world factors interact with airflow. Furthermore, we provide practical recommendations and signal processing solutions to improve the resilience of acoustic sensing systems for real-world deployment. We envision that WindDancer establishes a theoretical foundation for understanding the impact of airflow on acoustic sensing, and advances the reliability of acoustic sensing technologies for broader adoption. |

|

abstract /

bibtex

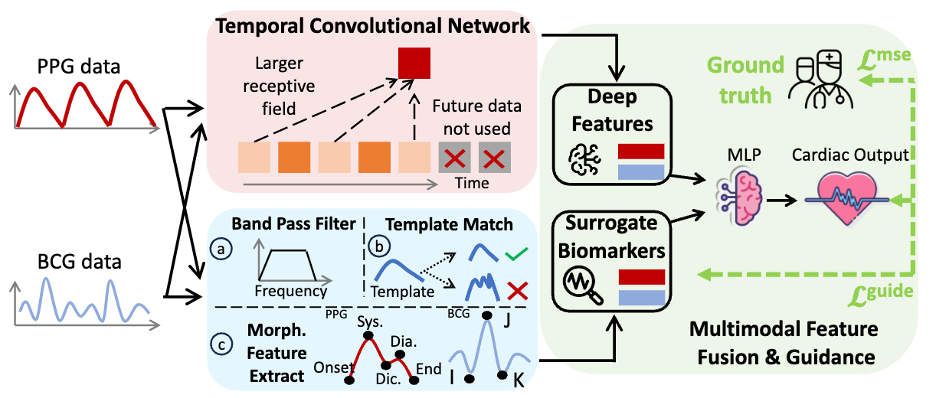

Cardiac Output (CO) is a critical indicator of health, offering insights into cardiac dysfunction, acute stress responses, and cognitive decline. Traditional CO monitoring methods, like impedance cardiography, are invasive and impractical for daily use, leading to a gap in continuous, non-invasive monitoring. Although recent work has explored wearables for heart rate monitoring, these approaches face challenges in accurately estimating CO due to the indirect nature of the signals. To address these challenges, we introduce EarCO, a non-invasive multimodal CO monitoring system that uses Photoplethysmography and Ballistocardiogram signals on commodity earbuds. A novel feature fusion method is proposed to integrate raw signals and prior knowledge from both modalities, improving the system’s interpretability and accuracy. EarCO achieves a lower error of 1.080 L/min in the leave-one-subject-out setting with 62 subjects, making cardiovascular health monitoring accessible and practical for daily use. |

|

abstract /

bibtex

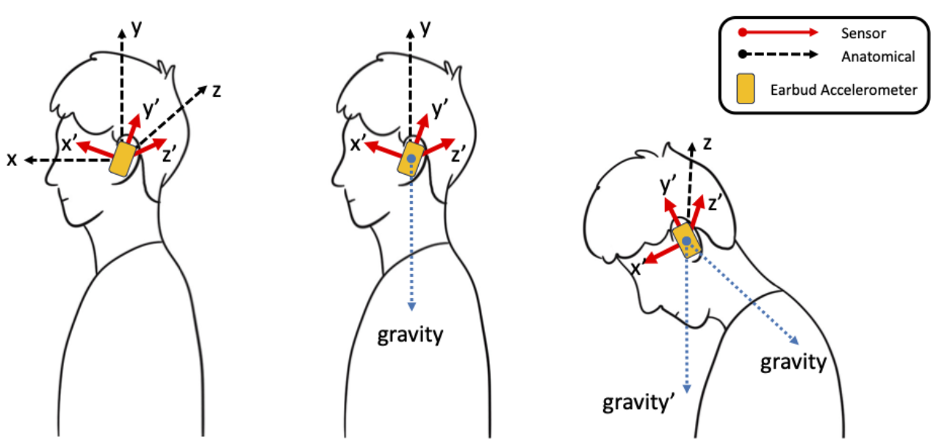

The earbud-based ballistocardiogram (BCG) holds significant promise for monitoring diverse physiological signals, including stress, cardiac activity, and blood pressure. However, unlike traditional methods that measure the force component along the head-to-foot axis for enhanced BCG signal quality, ear-worn devices are prone to orientation misalignment, leading to significant variations in BCG morphology. To address this challenge, we propose two novel algorithms: one that employs sensor-to-body-segment calibration and another that applies a calibration-free, physiologically informed axis fusion method to enhance earbud-based BCG signals. We evaluate the performance of these approaches against existing methods in the literature, focusing on heart rate variability (HRV) estimation and morphological feature extraction. Through a comprehensive investigation, we aim to identify optimal strategies for obtaining high-quality BCG signals using ear-worn devices. |

|

abstract /

bibtex

This paper examines the potential of commercial earbuds for detecting physiological biomarkers like heart rate (HR) and heart rate variability (HRV) for stress assessment. Using accelerometer (IMU) and photoplethysmography (PPG) data from earbuds, we compared these estimates with reference electrocardiogram (ECG) data from 81 healthy participants. We explored using low-power accelerometer sensors for capturing ballistocardiography (BCG) signals. However, BCG signal quality can vary due to individual differences and body motion. Therefore, BCG data quality assessment is critical before extracting any meaningful biomarkers. To address this, we introduced the ECG-gated BCG heatmap, a new method for assessing BCG signal quality. We trained a Random Forest model to identify usable signals, achieving 82% test accuracy. Filtering out unusable signals improved HR/HRV estimation accuracy to levels comparable to PPG-based estimates. Our findings demonstrate the feasibility of accurate physiological monitoring with earbuds, advancing the development of user-friendly wearable health technologies for stress management. |

|

abstract /

bibtex

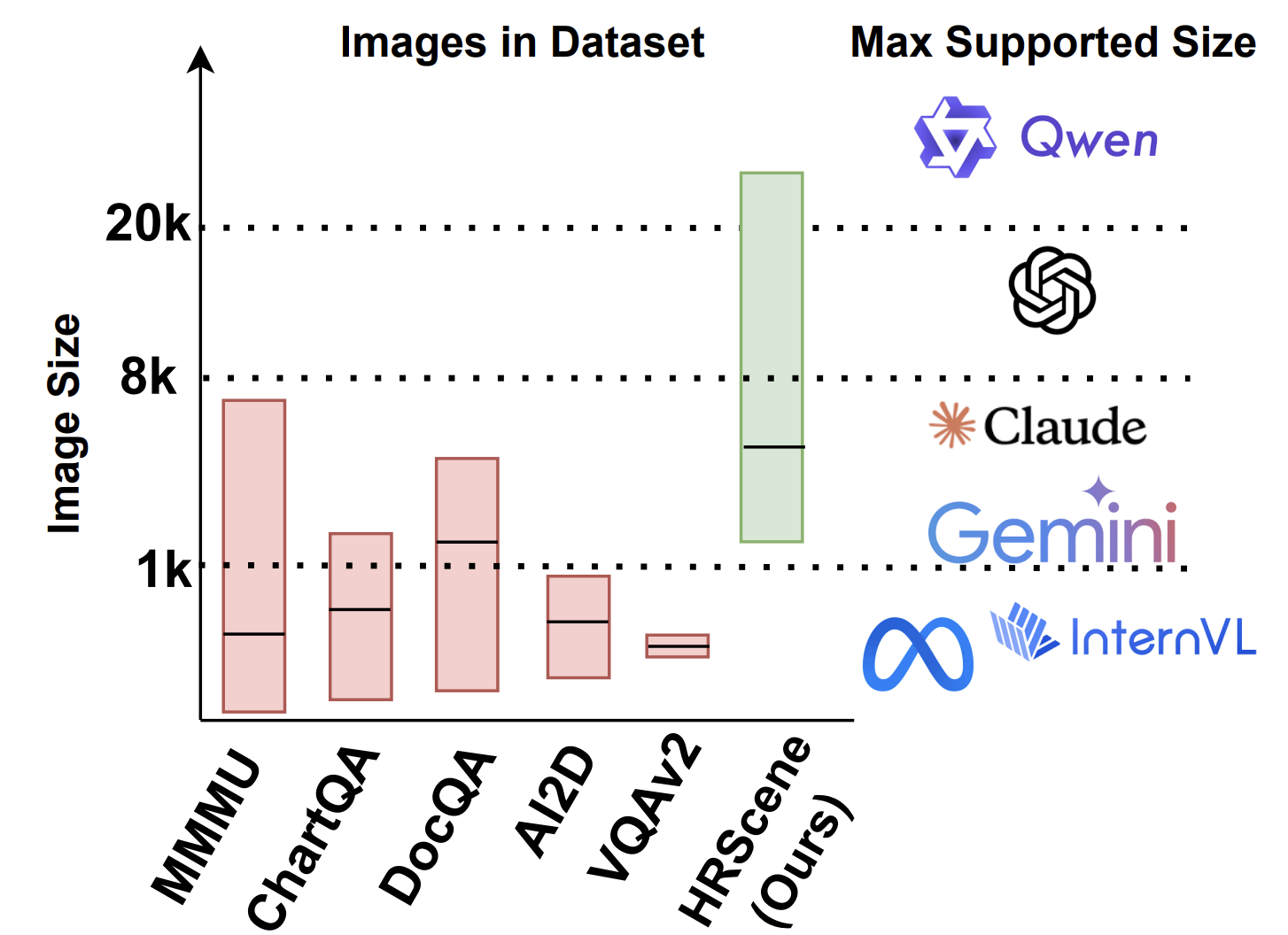

High-resolution image (HRI) understanding aims to process images with a large number of pixels, such as pathological images and agricultural aerial images, both of which can exceed 1 million pixels. Vision Large Language Models (VLMs) can allegedly handle HRIs; however, there is a lack of a comprehensive benchmark for VLMs to evaluate HRI understanding. To address this gap, we introduce HRScene, a novel unified benchmark for HRI understanding with rich scenes. HRScene incorporates 25 real-world datasets and 2 synthetic diagnostic datasets with resolutions ranging from 1,024 × 1,024 to 35,503 × 26,627. HRScene is collected and re-annotated by 10 graduate-level annotators, covering 25 scenarios, ranging from microscopic to radiology images, street views, long-range pictures, and telescope images. It includes HRIs of real-world objects, scanned documents, and composite multi-image. The two diagnostic evaluation datasets are synthesized by combining the target image with the gold answer and distracting images in different orders, assessing how well models utilize regions in HRI. We conduct extensive experiments involving 28 VLMs, including Gemini 2.0 Flash and GPT-4o. Experiments on HRScene show that current VLMs achieve an average accuracy of around 50% on real-world tasks, revealing significant gaps in HRI understanding. Results on synthetic datasets reveal that VLMs struggle to effectively utilize HRI regions, showing significant Regional Divergence and lost-in-middle, shedding light on future research. |

|

abstract /

bibtex /

slides

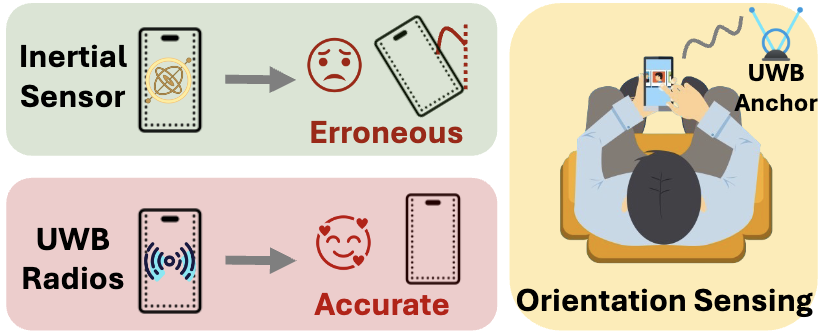

While localization has gained a tremendous amount of attention from both academia and industry, much less attention has been paid to the equally important problem of orientation estimation. Traditional orientation estimation systems relying on gyroscopes suffer from cumulative errors. In this paper, we propose UWBOrient, the first fine-grained orientation estimation system utilizing ultra-wideband (UWB) modules embedded in smartphones. The proposed system presents an alternative solution that is more accurate than gyroscope estimates and free of error accumulation. We propose to fuse UWB estimates with gyroscope estimates to address the challenge associated with UWB estimation alone and further improve the estimation accuracy. UWBOrient decreases the estimation error from the state-of-the-art 7.6 degrees to 2.7 degrees while maintaining a low latency (20 ms) and low energy consumption (40 mWh). Comprehensive experiments with both iPhone and Android smartphones demonstrate the effectiveness of the proposed system under various conditions including natural motion, dynamic multipath and NLoS. Two real-world applications, i.e., head orientation tracking and 3D reconstruction, are employed to showcase the practicality of UWBOrient. |

|

abstract /

bibtex

In this paper, we introduce SmartDampener, an open-source tennis analytics platform that redefines the traditional understanding of vibration dampeners. Traditional vibration dampeners favored by both amateur and professional tennis players are utilized primarily to diminish vibration transmission and enhance racket stability. However, our platform uniquely merges wireless sensing technologies into a device that resembles a conventional vibration dampener, thereby offering a range of tennis performance metrics including ball speed, impact location, and stroke type. The design of SmartDampener adheres to the familiar form of this accessory, ensuring that (i) it is readily accepted by users and robust under real-play conditions such as ball-hitting, (ii) it has minimal impact on player performance, (iii) it is capable of providing a wide range of analytical insights, and (iv) it is extensible to other sports. Existing computer vision systems for tennis sensing, such as Hawkeye and PlaySight, rely on hardware that costs millions of US dollars to deploy, requires complex setup procedures, and is susceptible to lighting conditions. Wearable devices and other tennis sensing accessories, such as the Zepp Tennis sensor and TennisEye, use intrusive mounting locations that hinder user experience and impede player performance. In contrast, SmartDampener, a low-cost and compact tennis sensing device, notable for its socially accepted, lightweight, and scalable design, seamlessly melds into the form of a vibration dampener. SmartDampener exploits opportunities in SoC and form factor design of conventional dampeners to integrate the sensing units and micro-controllers on a two-layer flexible PCB that is bent and enclosed inside a dampener case made of 3D-printed TPU material, while maintaining the vibration dampening feature and adding extended battery life and wireless communication. The overall cost is $9.42, with dimensions of 21.4 mm × 27.5 mm × 9.7 mm (W × L × H), a weight of 6.1 g, and 5.8 hours of battery life. As proof of SmartDampener’s performance in tennis analytics, we present various tennis analytic applications that exploit the capability of SmartDampener in capturing the correlations across string vibration and racket motion, including the estimation of ball speed with a median error of 3.59 mph, estimation of ball impact location with an accuracy of 3.03 cm, and classification of six tennis strokes with an accuracy of 96.75%. Finally, extensive usability studies with 15 tennis players indicate high levels of social acceptance of the SmartDampener form factor in comparison with alternative form factors, as well as its capability of sensing and analyzing tennis strokes in an accurate and robust manner. We believe this platform will enable exciting applications in other sports like badminton, fitness tracking, and injury prevention. |

|

abstract /

project page /

bibtex

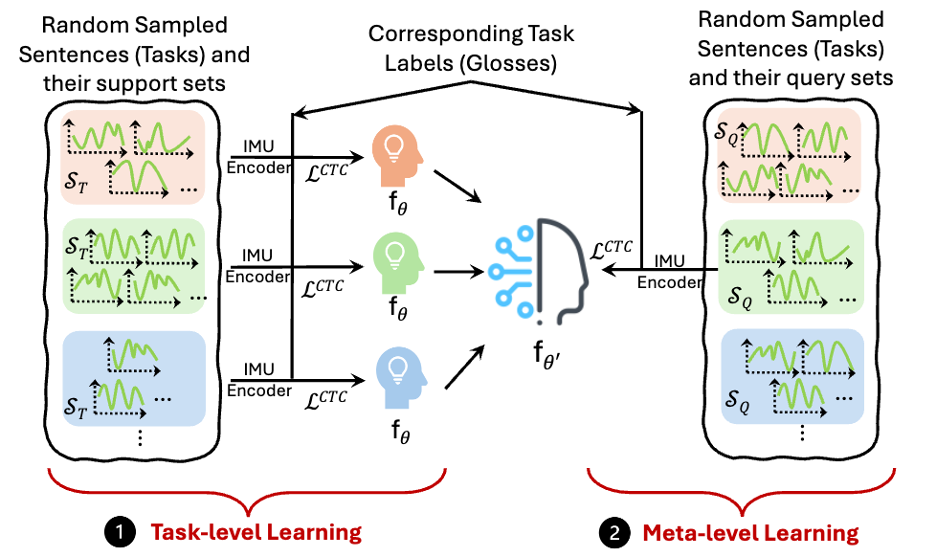

Sign Language is widely used by over 500 million Deaf and hard of hearing (DHH) individuals in their daily lives. While prior works made notable efforts to show the feasibility of recognizing signs with various sensing modalities from both the wireless and wearable domains, they recruited sign language learners for validation. Based on our interactions with native sign language users, we found that signal diversity hinders generalization across users (e.g., users from different backgrounds interpret signs differently, and native users have complex articulated signs), thus resulting in recognition difficulty. While multiple solutions (e.g., increasing diversity of data, harvesting virtual data from sign videos) are possible, we propose ASLRing, which addresses the sign language recognition problem from a meta-learning perspective by learning inherent knowledge about diverse spaces of signs for fast adaptation. ASLRing bypasses the expensive data collection process and avoids the limitations of leveraging virtual data from sign videos (e.g., occlusions, overexposure, low resolution). To validate ASLRing, instead of recruiting learners, we conducted a comprehensive user study with a database of 1080 sentences generated from a vocabulary of size 1057 from 14 native sign language users and achieved a 26.9% word error rate, and we also validated ASLRing in diverse settings. |

|

abstract /

bibtex /

slides

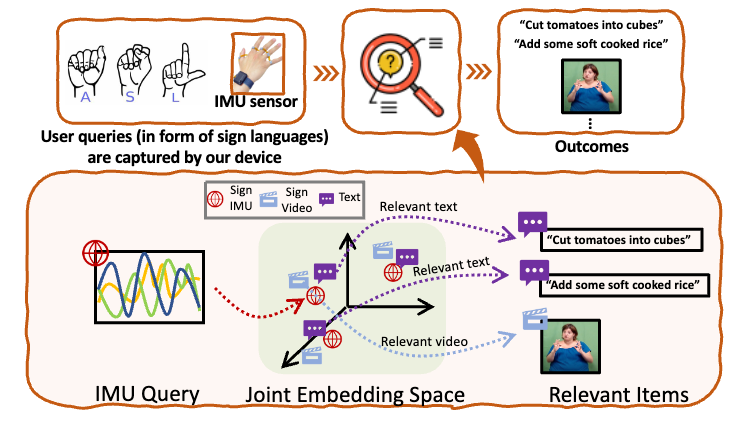

Search Engines such as Google, Baidu, and Bing have revolutionized the way we interact with the cyber world with a number of applications in recommendations, learning, advertisements, healthcare, entertainment, etc. In this paper, we design search engines for sign languages such as American Sign Language (ASL). Sign languages use hand and body motion for communication with rich grammar, complexity, and vocabulary that is comparable to spoken languages. This is the primary language for the Deaf community with a global population of ≈ 500 million. However, search engines that support sign language queries in native form do not exist currently. While translating a sign language to a spoken language and using existing search engines might be one possibility, this can miss critical information because existing translation systems are either limited in vocabulary or constrained to a specific domain. In contrast, this paper presents a holistic approach where ASL queries in native form as well as ASL videos and textual information available online are converted into a common representation space. Such a joint representation space provides a common framework for precisely representing different sources of information and accurately matching a query with relevant information that is available online. Our system uses low-intrusive wearable sensors for capturing the sign query. To minimize the training overhead, we obtain synthetic training data from a large corpus of online ASL videos across diverse topics. Evaluated over a set of Deaf users with native ASL fluency, the accuracy is comparable with state-of-the-art recommendation systems for Amazon, Netflix, Yelp, etc., suggesting the usability of the system in the real world. For example, the recall@10 of our system is 64.3%, i.e., among the top ten search results, six of them are relevant to the search query. Moreover, the system is robust to variations in signing patterns, dialects, sensor positions, etc. |

|

abstract /

bibtex

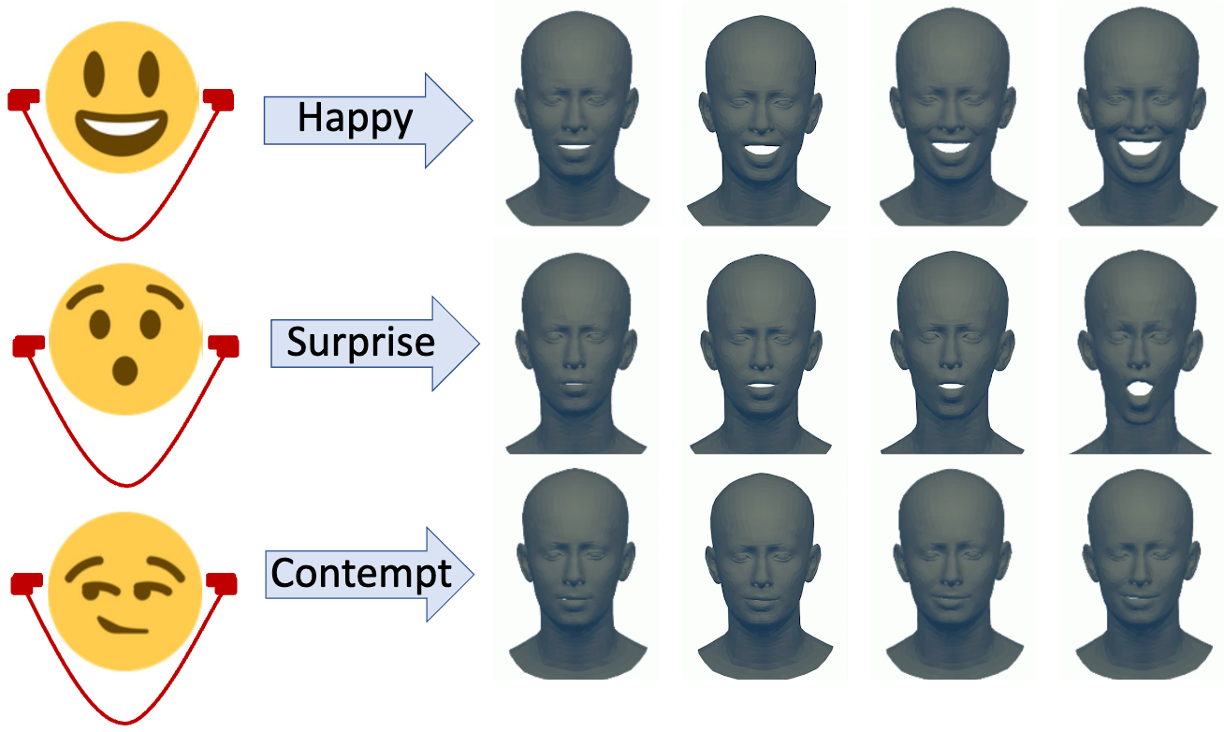

This paper presents EARFace, a system that shows the feasibility of tracking facial landmarks for 3D facial reconstruction using in-ear acoustic sensors embedded within smart earphones. This enables a number of applications in the areas of facial expression tracking, user-interfaces, AR/VR applications, affective computing, accessibility, etc. While conventional vision-based solutions break down under poor lighting and occlusions and also suffer from privacy concerns, earphone platforms are robust to ambient conditions, while being privacy-preserving. In contrast to prior work on earable platforms that perform outer-ear sensing for facial motion tracking, EARFace shows the feasibility of completely in-ear sensing with a natural earphone form-factor, thus enhancing the comfort levels of wearing. The core intuition exploited by EARFace is that the shape of the ear canal changes due to the movement of facial muscles during facial motion. EARFace tracks the changes in shape of the ear canal by measuring ultrasonic channel frequency response (CFR) of the inner ear, ultimately resulting in tracking of the facial motion. A transformer-based machine learning (ML) model is designed to exploit spectral and temporal relationships in the ultrasonic CFR data to predict the facial landmarks of the user with an accuracy of 1.83 mm. Using these predicted landmarks, a 3D graphical model of the face that replicates the precise facial motion of the user is then reconstructed. Domain adaptation is further performed by adapting the weights of layers using a group-wise and differential learning rate. This decreases the training overhead in EARFace. The transformer-based ML model runs on smartphone devices with a processing latency of 13 ms and an overall low power consumption profile. Finally, usability studies indicate higher levels of wearing comfort for EARFace’s earphone platform in comparison with alternative form-factors. |

|

abstract /

project page /

bibtex

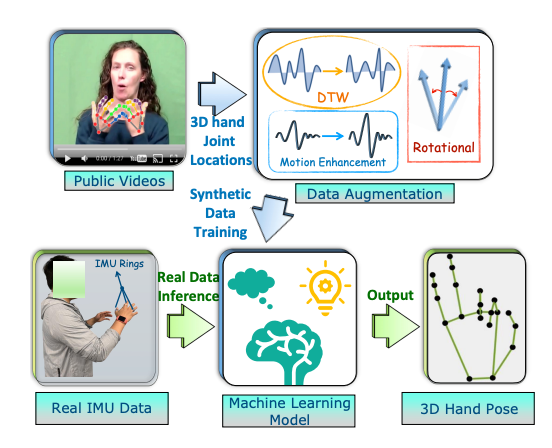

This paper presents OmniRing, an open-source smartring platform with IMU and PPG sensors for activity tracking and health analytics applications. Smartring platforms are on the rise because they are comfortable to wear, with the market size expected to reach $92 million soon. Nevertheless, most existing platforms are either commercial and proprietary without details of software/hardware or use suboptimal PCB design resulting in bulky form factors, inconvenient for wearing in daily life. Towards bridging the gap, OmniRing presents an extensible design of a smartring with a miniature form factor, longer battery life, wireless communication, and water resistance so that users can wear it all the time. Towards this end, OmniRing exploits opportunities in SoC, and carefully integrates the sensing units with a microcontroller and BLE modules. The electronic components are integrated on both sides of a flexible PCB that is bent in the shape of a ring and enclosed in a flexible and waterproof case for smooth skin contact. The overall cost is under $25, with a weight of 2.5 g and up to a week of battery life. Extensive usability surveys validate the comfort levels. To validate the sensing capabilities, we enable an application in 3D finger motion tracking. By extracting synthetic training data from public videos coupled with data augmentation to minimize the overhead of training data generation for a new platform, OmniRing designs a transformer-based model that exploits correlations across fingers and time to track 3D finger motion with an accuracy of 6.57 mm. We also validate the use of PPG data from OmniRing for heart rate monitoring. We believe the platform can enable exciting applications in fitness tracking, metaverse, sports, and healthcare. |

|

abstract /

bibtex /

slides

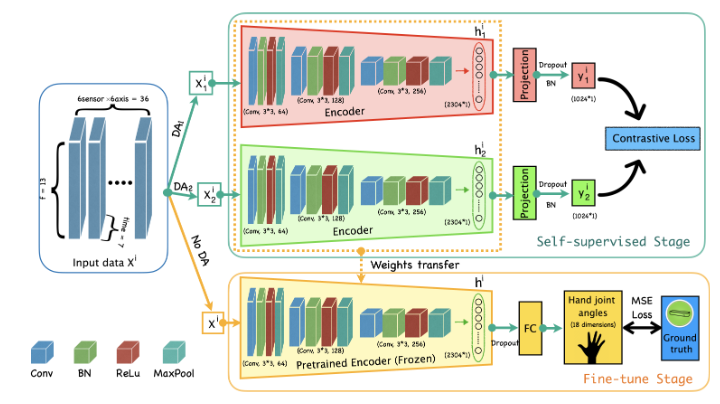

This paper presents ssLOTR (self-supervised learning on the rings), a system that shows the feasibility of designing self-supervised learning based techniques for 3D finger motion tracking using a custom-designed wearable inertial measurement unit (IMU) sensor with a minimal overhead of labeled training data. Ubiquitous finger motion tracking enables a number of applications in augmented and virtual reality, sign language recognition, rehabilitation healthcare, sports analytics, etc. However, unlike vision, there are no large-scale training datasets for developing robust machine learning (ML) models on wearable devices. ssLOTR designs ML models based on data augmentation and self-supervised learning to first extract efficient representations from raw IMU data without the need for any training labels. The extracted representations are further trained with small-scale labeled training data. In comparison to fully supervised learning, we show that only 15% of labeled training data is sufficient with self-supervised learning to achieve similar accuracy. Our sensor device is designed using a two-layer printed circuit board (PCB) to minimize the footprint and uses a combination of Polylactic acid (PLA) and Thermoplastic polyurethane (TPU) as housing materials for sturdiness and flexibility. It incorporates a system-on-chip (SoC) microcontroller with integrated WiFi/Bluetooth Low Energy (BLE) modules for real-time wireless communication, portability, and ubiquity. In contrast to gloves, our device is worn like rings on fingers, and therefore, does not impede dexterous finger motion. Extensive evaluation with 12 users depicts a 3D joint angle tracking accuracy of 9.07° (joint position accuracy of 6.55 mm) with robustness to natural variation in sensor positions, wrist motion, etc., with low overhead in latency and power consumption on embedded platforms. |

|

abstract /

bibtex /

related (dataset)

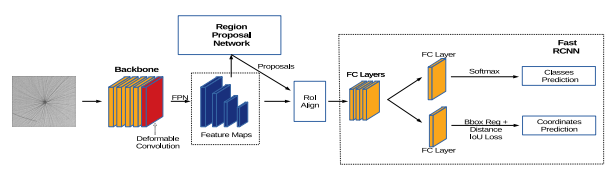

This paper presents a fabric defect detection network (FabricNet) for automatic fabric defect detection. Our proposed FabricNet incorporates several effective techniques, such as Feature Pyramid Network (FPN), Deformable Convolution (DC) network, and Distance IoU Loss function, into vanilla Faster R-CNN to improve the accuracy and speed of fabric defect detection. Our experiments show that, when the optimizations are combined, FabricNet achieves 62.07% mAP and 97.37% AP50 on the DAGM 2007 dataset, with an average prediction speed of 17 frames per second. |

|

|

|

Last updated in 2025. Thanks for the template. |